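Create the key pair id_rsa and id_rsa.pub in the .ssh folder (`-t rsa` makes sure an RSA key is generated, since recent OpenSSH releases default to Ed25519):

```shell
ssh-keygen -t rsa
cd ~/.ssh
```

Upload the public key to the server:

```shell
ssh-copy-id -i ~/.ssh/id_rsa.pub root@server_ip
```

Now you can SSH into the remote server without typing a password~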
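On the server side, ssh-copy-id essentially appends the contents of id_rsa.pub to ~/.ssh/authorized_keys. The sketch below imitates only that step locally; the function name `append_authorized_key` is hypothetical, and the real tool also handles the SSH connection and more edge cases.

```python
import os
from pathlib import Path


def append_authorized_key(auth_keys: Path, pubkey: str) -> bool:
    """Append one public key line to an authorized_keys file if it is not already there.

    Returns True if the key was added, False if it was already present.
    """
    key = pubkey.strip()
    auth_keys.parent.mkdir(mode=0o700, parents=True, exist_ok=True)
    text = auth_keys.read_text() if auth_keys.exists() else ""
    if key in (line.strip() for line in text.splitlines()):
        return False
    # Start the new key on its own line if the file lacks a trailing newline.
    prefix = "" if text == "" or text.endswith("\n") else "\n"
    with auth_keys.open("a") as f:
        f.write(prefix + key + "\n")
    # sshd's StrictModes check (on by default) rejects key files other users can write to.
    os.chmod(auth_keys, 0o600)
    return True


# Example (hypothetical paths), run on the server:
# append_authorized_key(Path.home() / ".ssh" / "authorized_keys",
#                       Path("id_rsa.pub").read_text())
```

Calling it twice with the same key leaves a single entry, which mirrors how ssh-copy-id skips keys that are already installed.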

Search Docker Hub for the python image, then pull it:

```shell
docker pull python:3.8.7-slim-buster
```

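Options used by docker run:

- `-it`: run the container interactively with a terminal (`-i` keeps STDIN open, `-t` allocates a TTY)
- `--name`: custom container name; if omitted, Docker assigns a random one
- `-v ~/mednli:/root/mednli`: mount the host directory ~/mednli at /root/mednli inside the container
- `python:3.8.7-slim-buster`: the image name plus TAG to run
- `bash`: the command to run, which drops you into a shell inside the container

```shell
docker run -it --name pytest -v ~/mednli:/root/mednli python:3.8.7-slim-buster bash
```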
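If you want to launch the same container from a script, the options above map directly onto an argument list. A minimal sketch; `docker_run_cmd` is a hypothetical helper, and it expands `~` itself because no shell is involved when a list is passed to subprocess:

```python
from __future__ import annotations

import os
import shlex


def docker_run_cmd(image: str, name: str, host_dir: str, container_dir: str,
                   command: str = "bash") -> list[str]:
    """Build the argv for an interactive `docker run` with one bind mount."""
    host_dir = os.path.expanduser(host_dir)  # a shell would normally expand ~
    return [
        "docker", "run",
        "-it",                                # -i: keep STDIN open, -t: allocate a TTY
        "--name", name,                       # fixed name instead of a random one
        "-v", f"{host_dir}:{container_dir}",  # bind-mount host_dir at container_dir
        image,                                # image name:TAG
        command,                              # process started inside the container
    ]


cmd = docker_run_cmd("python:3.8.7-slim-buster", "pytest", "~/mednli", "/root/mednli")
print(shlex.join(cmd))
```

Passing `cmd` to `subprocess.run(cmd)` would start the interactive container just like the shell command.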

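Inside the container (the prompt shows `root@e408c3c08685:~#`), install the required Python packages:

```shell
pip install -r ~/mednli/requirements.txt
```

Type `exit` to leave the Docker container.

List all containers, including stopped ones:

```shell
docker ps -a
```

Commit the container as an image 📦:

```shell
docker commit -a "cbl" -m "my python env" e408c3c08685 python_env:v1
```

- `-a`: author of the commit
- `-m`: commit message
- `e408c3c08685`: container ID
- `python_env:v1`: image name:TAG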
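Instead of copying the container ID from `docker ps -a` by hand, it can be looked up by name. A small sketch, assuming you ask docker ps for only the ID and name columns via `--format "{{.ID}} {{.Names}}"`; both helper names are hypothetical:

```python
from __future__ import annotations


def container_id_by_name(ps_output: str, name: str) -> str | None:
    """Find a container ID in `docker ps -a --format "{{.ID}} {{.Names}}"` output."""
    for line in ps_output.splitlines():
        parts = line.split()
        if len(parts) == 2 and parts[1] == name:
            return parts[0]
    return None


def docker_commit_cmd(container_id: str, repo_tag: str, author: str, message: str) -> list[str]:
    """Argument list equivalent to: docker commit -a AUTHOR -m MESSAGE ID REPO:TAG"""
    return ["docker", "commit", "-a", author, "-m", message, container_id, repo_tag]


# Example (requires Docker):
# import subprocess
# ps = subprocess.run(["docker", "ps", "-a", "--format", "{{.ID}} {{.Names}}"],
#                     capture_output=True, text=True, check=True).stdout
# cid = container_id_by_name(ps, "pytest")
# subprocess.run(docker_commit_cmd(cid, "python_env:v1", "cbl", "my python env"), check=True)
```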

Install fairseq from source:

```shell
git clone https://github.com/pytorch/fairseq.git
cd fairseq
pip install --editable ./
```

For macOS:

```shell
CFLAGS="-stdlib=libc++" pip install --editable ./
```

Next, follow https://github.com/pytorch/fairseq/blob/master/examples/roberta/README.pretraining.md.

Download the WikiText-103 dataset:

```shell
wget https://s3.amazonaws.com/research.metamind.io/wikitext/wikitext-103-raw-v1.zip
```

Encode the dataset with the GPT-2 BPE:

```shell
mkdir -p gpt2_bpe
```

```shell
wget -O gpt2_bpe/dict.txt https://dl.fbaipublicfiles.com/fairseq/gpt2_bpe/dict.txt
```

This produces the data-bin/wikitext-103 folder, which holds the preprocessed dataset.

Training can now start; it requires GPUs.

```shell
TOTAL_UPDATES=125000    # Total number of training steps
```
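The training log further down shows batch_size=16 per GPU, update_freq=[16] (gradient accumulation), 8 GPUs and tokens_per_sample=512. A quick sketch of what those settings mean per optimizer update, assuming each GPU contributes its full batch on every accumulation step:

```python
def effective_batch(per_gpu_batch: int, update_freq: int, num_gpus: int) -> int:
    """Sequences per optimizer update with gradient accumulation over update_freq steps."""
    return per_gpu_batch * update_freq * num_gpus


seqs = effective_batch(16, 16, 8)  # values taken from the training log
tokens = seqs * 512                # upper bound: blocks hold at most tokens_per_sample=512 tokens
total_seqs = seqs * 125_000        # TOTAL_UPDATES
print(seqs, tokens, total_seqs)    # 2048 1048576 256000000
```

On fewer GPUs, raising update_freq by the same factor keeps the effective batch at 2048 sequences.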

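If you get the following error:

```text
Error: mkl-service + Intel(R) MKL: MKL_THREADING_LAYER=INTEL is incompatible with libgomp.so.1 library.
```

Adding `export MKL_THREADING_LAYER=GNU` to `~/.bashrc` fixes it (run `source ~/.bashrc` or open a new shell so it takes effect).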
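If editing ~/.bashrc is not an option, the variable can also be set from Python. This is a sketch under the assumption that it runs before numpy or torch (and therefore MKL) is first imported in the process, since the check happens when the library is loaded:

```python
import os
import sys

# Setting the variable after numpy/torch are already loaded is likely too late.
if "numpy" in sys.modules or "torch" in sys.modules:
    print("warning: numpy/torch already imported; MKL_THREADING_LAYER may not take effect")
os.environ["MKL_THREADING_LAYER"] = "GNU"
```

Worker processes started afterwards inherit the environment, so this also covers the per-GPU processes.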

The following warning may appear; it is only a deprecation notice and training continues:

```text
/home/User/miniconda3/lib/python3.8/site-packages/torch/nn/parallel/distributed.py:397: UserWarning: The `check_reduction` argument in `DistributedDataParallel` module is deprecated. Please avoid using it.
```

Running on an 8-GPU server:

```text
2020-12-28 15:40:38 | INFO | fairseq.distributed_utils | distributed init (rank 0): tcp://localhost:12737
2020-12-28 15:40:38 | INFO | fairseq.distributed_utils | distributed init (rank 1): tcp://localhost:12737
2020-12-28 15:40:38 | INFO | fairseq.distributed_utils | distributed init (rank 2): tcp://localhost:12737
2020-12-28 15:40:38 | INFO | fairseq.distributed_utils | distributed init (rank 6): tcp://localhost:12737
2020-12-28 15:40:38 | INFO | fairseq.distributed_utils | distributed init (rank 5): tcp://localhost:12737
2020-12-28 15:40:38 | INFO | fairseq.distributed_utils | distributed init (rank 3): tcp://localhost:12737
2020-12-28 15:40:38 | INFO | fairseq.distributed_utils | distributed init (rank 4): tcp://localhost:12737
2020-12-28 15:40:38 | INFO | fairseq.distributed_utils | distributed init (rank 7): tcp://localhost:12737
2020-12-28 15:40:45 | INFO | fairseq.distributed_utils | initialized host song1-SYS-4029GP-TRT as rank 5
2020-12-28 15:40:45 | INFO | fairseq.distributed_utils | initialized host song1-SYS-4029GP-TRT as rank 6
2020-12-28 15:40:45 | INFO | fairseq.distributed_utils | initialized host song1-SYS-4029GP-TRT as rank 0
2020-12-28 15:40:45 | INFO | fairseq.distributed_utils | initialized host song1-SYS-4029GP-TRT as rank 7
2020-12-28 15:40:45 | INFO | fairseq.distributed_utils | initialized host song1-SYS-4029GP-TRT as rank 3
2020-12-28 15:40:45 | INFO | fairseq.distributed_utils | initialized host song1-SYS-4029GP-TRT as rank 2
2020-12-28 15:40:45 | INFO | fairseq.distributed_utils | initialized host song1-SYS-4029GP-TRT as rank 4
2020-12-28 15:40:45 | INFO | fairseq.distributed_utils | initialized host song1-SYS-4029GP-TRT as rank 1
```

2020-12-28 15:40:45 | INFO | fairseq_cli.train | {‘_name’: None, ‘common’: {‘_name’: None, ‘no_progress_bar’: False, ‘log_interval’: 1, ‘log_format’: ‘simple’, ‘tensorboard_logdir’: None, ‘wandb_project’: None, ‘azureml_logging’: False, ‘seed’: 1, ‘cpu’: False, ‘tpu’: False, ‘bf16’: False, ‘memory_efficient_bf16’: False, ‘fp16’: True, ‘memory_efficient_fp16’: False, ‘fp16_no_flatten_grads’: False, ‘fp16_init_scale’: 128, ‘fp16_scale_window’: None, ‘fp16_scale_tolerance’: 0.0, ‘min_loss_scale’: 0.0001, ‘threshold_loss_scale’: None, ‘user_dir’: None, ‘empty_cache_freq’: 0, ‘all_gather_list_size’: 16384, ‘model_parallel_size’: 1, ‘quantization_config_path’: None, ‘profile’: False, ‘reset_logging’: True}, ‘common_eval’: {‘_name’: None, ‘path’: None, ‘post_process’: None, ‘quiet’: False, ‘model_overrides’: ‘{}’, ‘results_path’: None}, ‘distributed_training’: {‘_name’: None, ‘distributed_world_size’: 8, ‘distributed_rank’: 0, ‘distributed_backend’: ‘nccl’, ‘distributed_init_method’: ‘tcp://localhost:12737’, ‘distributed_port’: -1, ‘device_id’: 0, ‘distributed_no_spawn’: False, ‘ddp_backend’: ‘c10d’, ‘bucket_cap_mb’: 25, ‘fix_batches_to_gpus’: False, ‘find_unused_parameters’: False, ‘fast_stat_sync’: False, ‘heartbeat_timeout’: -1, ‘broadcast_buffers’: False, ‘distributed_wrapper’: ‘DDP’, ‘slowmo_momentum’: None, ‘slowmo_algorithm’: ‘LocalSGD’, ‘localsgd_frequency’: 3, ‘nprocs_per_node’: 8, ‘pipeline_model_parallel’: False, ‘pipeline_balance’: None, ‘pipeline_devices’: None, ‘pipeline_chunks’: 0, ‘pipeline_encoder_balance’: None, ‘pipeline_encoder_devices’: None, ‘pipeline_decoder_balance’: None, ‘pipeline_decoder_devices’: None, ‘pipeline_checkpoint’: ‘never’, ‘zero_sharding’: ‘none’, ‘tpu’: False, ‘distributed_num_procs’: 8}, ‘dataset’: {‘_name’: None, ‘num_workers’: 1, ‘skip_invalid_size_inputs_valid_test’: False, ‘max_tokens’: None, ‘batch_size’: 16, ‘required_batch_size_multiple’: 8, ‘required_seq_len_multiple’: 1, ‘dataset_impl’: None, ‘data_buffer_size’: 10, 
‘train_subset’: ‘train’, ‘valid_subset’: ‘valid’, ‘validate_interval’: 1, ‘validate_interval_updates’: 0, ‘validate_after_updates’: 0, ‘fixed_validation_seed’: None, ‘disable_validation’: False, ‘max_tokens_valid’: None, ‘batch_size_valid’: 16, ‘curriculum’: 0, ‘gen_subset’: ‘test’, ‘num_shards’: 1, ‘shard_id’: 0}, ‘optimization’: {‘_name’: None, ‘max_epoch’: 0, ‘max_update’: 125000, ‘stop_time_hours’: 0.0, ‘clip_norm’: 0.0, ‘sentence_avg’: False, ‘update_freq’: [16], ‘lr’: [0.0005], ‘stop_min_lr’: -1.0, ‘use_bmuf’: False}, ‘checkpoint’: {‘_name’: None, ‘save_dir’: ‘checkpoints’, ‘restore_file’: ‘checkpoint_last.pt’, ‘finetune_from_model’: None, ‘reset_dataloader’: False, ‘reset_lr_scheduler’: False, ‘reset_meters’: False, ‘reset_optimizer’: False, ‘optimizer_overrides’: ‘{}’, ‘save_interval’: 1, ‘save_interval_updates’: 0, ‘keep_interval_updates’: -1, ‘keep_last_epochs’: -1, ‘keep_best_checkpoints’: -1, ‘no_save’: False, ‘no_epoch_checkpoints’: False, ‘no_last_checkpoints’: False, ‘no_save_optimizer_state’: False, ‘best_checkpoint_metric’: ‘loss’, ‘maximize_best_checkpoint_metric’: False, ‘patience’: -1, ‘checkpoint_suffix’: ‘’, ‘checkpoint_shard_count’: 1, ‘load_checkpoint_on_all_dp_ranks’: False, ‘model_parallel_size’: 1, ‘distributed_rank’: 0}, ‘bmuf’: {‘_name’: None, ‘block_lr’: 1.0, ‘block_momentum’: 0.875, ‘global_sync_iter’: 50, ‘warmup_iterations’: 500, ‘use_nbm’: False, ‘average_sync’: False, ‘distributed_world_size’: 8}, ‘generation’: {‘_name’: None, ‘beam’: 5, ‘nbest’: 1, ‘max_len_a’: 0.0, ‘max_len_b’: 200, ‘min_len’: 1, ‘match_source_len’: False, ‘unnormalized’: False, ‘no_early_stop’: False, ‘no_beamable_mm’: False, ‘lenpen’: 1.0, ‘unkpen’: 0.0, ‘replace_unk’: None, ‘sacrebleu’: False, ‘score_reference’: False, ‘prefix_size’: 0, ‘no_repeat_ngram_size’: 0, ‘sampling’: False, ‘sampling_topk’: -1, ‘sampling_topp’: -1.0, ‘constraints’: None, ‘temperature’: 1.0, ‘diverse_beam_groups’: -1, ‘diverse_beam_strength’: 0.5, ‘diversity_rate’: -1.0, 
‘print_alignment’: None, ‘print_step’: False, ‘lm_path’: None, ‘lm_weight’: 0.0, ‘iter_decode_eos_penalty’: 0.0, ‘iter_decode_max_iter’: 10, ‘iter_decode_force_max_iter’: False, ‘iter_decode_with_beam’: 1, ‘iter_decode_with_external_reranker’: False, ‘retain_iter_history’: False, ‘retain_dropout’: False, ‘retain_dropout_modules’: None, ‘decoding_format’: None, ‘no_seed_provided’: False}, ‘eval_lm’: {‘_name’: None, ‘output_word_probs’: False, ‘output_word_stats’: False, ‘context_window’: 0, ‘softmax_batch’: 9223372036854775807}, ‘interactive’: {‘_name’: None, ‘buffer_size’: 0, ‘input’: ‘-‘}, ‘model’: Namespace(_name=’roberta_base’, activation_dropout=0.0, activation_fn=’gelu’, adam_betas=’(0.9,0.98)’, adam_eps=1e-06, all_gather_list_size=16384, arch=’roberta_base’, attention_dropout=0.1, azureml_logging=False, batch_size=16, batch_size_valid=16, best_checkpoint_metric=’loss’, bf16=False, bpe=None, broadcast_buffers=False, bucket_cap_mb=25, checkpoint_shard_count=1, checkpoint_suffix=’’, clip_norm=0.0, cpu=False, criterion=’masked_lm’, curriculum=0, data=’data-bin/wikitext-103’, data_buffer_size=10, dataset_impl=None, ddp_backend=’c10d’, device_id=0, disable_validation=False, distributed_backend=’nccl’, distributed_init_method=None, distributed_no_spawn=False, distributed_port=-1, distributed_rank=0, distributed_world_size=8, distributed_wrapper=’DDP’, dropout=0.1, empty_cache_freq=0, encoder_attention_heads=12, encoder_embed_dim=768, encoder_ffn_embed_dim=3072, encoder_layerdrop=0, encoder_layers=12, encoder_layers_to_keep=None, end_learning_rate=0.0, eos=2, fast_stat_sync=False, find_unused_parameters=False, finetune_from_model=None, fix_batches_to_gpus=False, fixed_validation_seed=None, force_anneal=None, fp16=True, fp16_init_scale=128, fp16_no_flatten_grads=False, fp16_scale_tolerance=0.0, fp16_scale_window=None, freq_weighted_replacement=False, gen_subset=’test’, heartbeat_timeout=-1, keep_best_checkpoints=-1, keep_interval_updates=-1, keep_last_epochs=-1, 
leave_unmasked_prob=0.1, load_checkpoint_on_all_dp_ranks=False, localsgd_frequency=3, log_format=’simple’, log_interval=1, lr=[0.0005], lr_scheduler=’polynomial_decay’, mask_multiple_length=1, mask_prob=0.15, mask_stdev=0.0, mask_whole_words=False, max_epoch=0, max_tokens=None, max_tokens_valid=None, max_update=125000, maximize_best_checkpoint_metric=False, memory_efficient_bf16=False, memory_efficient_fp16=False, min_loss_scale=0.0001, model_parallel_size=1, no_epoch_checkpoints=False, no_last_checkpoints=False, no_progress_bar=False, no_save=False, no_save_optimizer_state=False, no_seed_provided=False, nprocs_per_node=8, num_shards=1, num_workers=1, optimizer=’adam’, optimizer_overrides=’{}’, pad=1, patience=-1, pipeline_balance=None, pipeline_checkpoint=’never’, pipeline_chunks=0, pipeline_decoder_balance=None, pipeline_decoder_devices=None, pipeline_devices=None, pipeline_encoder_balance=None, pipeline_encoder_devices=None, pipeline_model_parallel=False, pooler_activation_fn=’tanh’, pooler_dropout=0.0, power=1.0, profile=False, quant_noise_pq=0, quant_noise_pq_block_size=8, quant_noise_scalar=0, quantization_config_path=None, random_token_prob=0.1, required_batch_size_multiple=8, required_seq_len_multiple=1, reset_dataloader=False, reset_logging=True, reset_lr_scheduler=False, reset_meters=False, reset_optimizer=False, restore_file=’checkpoint_last.pt’, sample_break_mode=’complete’, save_dir=’checkpoints’, save_interval=1, save_interval_updates=0, scoring=’bleu’, seed=1, sentence_avg=False, shard_id=0, shorten_data_split_list=’’, shorten_method=’none’, skip_invalid_size_inputs_valid_test=False, slowmo_algorithm=’LocalSGD’, slowmo_momentum=None, spectral_norm_classification_head=False, stop_min_lr=-1.0, stop_time_hours=0, task=’masked_lm’, tensorboard_logdir=None, threshold_loss_scale=None, tokenizer=None, tokens_per_sample=512, total_num_update=’125000’, tpu=False, train_subset=’train’, unk=3, untie_weights_roberta=False, update_freq=[16], use_bmuf=False, 
use_old_adam=False, user_dir=None, valid_subset=’valid’, validate_after_updates=0, validate_interval=1, validate_interval_updates=0, wandb_project=None, warmup_updates=10000, weight_decay=0.01, zero_sharding=’none’), ‘task’: Namespace(_name=’masked_lm’, activation_dropout=0.0, activation_fn=’gelu’, adam_betas=’(0.9,0.98)’, adam_eps=1e-06, all_gather_list_size=16384, arch=’roberta_base’, attention_dropout=0.1, azureml_logging=False, batch_size=16, batch_size_valid=16, best_checkpoint_metric=’loss’, bf16=False, bpe=None, broadcast_buffers=False, bucket_cap_mb=25, checkpoint_shard_count=1, checkpoint_suffix=’’, clip_norm=0.0, cpu=False, criterion=’masked_lm’, curriculum=0, data=’data-bin/wikitext-103’, data_buffer_size=10, dataset_impl=None, ddp_backend=’c10d’, device_id=0, disable_validation=False, distributed_backend=’nccl’, distributed_init_method=None, distributed_no_spawn=False, distributed_port=-1, distributed_rank=0, distributed_world_size=8, distributed_wrapper=’DDP’, dropout=0.1, empty_cache_freq=0, encoder_attention_heads=12, encoder_embed_dim=768, encoder_ffn_embed_dim=3072, encoder_layerdrop=0, encoder_layers=12, encoder_layers_to_keep=None, end_learning_rate=0.0, eos=2, fast_stat_sync=False, find_unused_parameters=False, finetune_from_model=None, fix_batches_to_gpus=False, fixed_validation_seed=None, force_anneal=None, fp16=True, fp16_init_scale=128, fp16_no_flatten_grads=False, fp16_scale_tolerance=0.0, fp16_scale_window=None, freq_weighted_replacement=False, gen_subset=’test’, heartbeat_timeout=-1, keep_best_checkpoints=-1, keep_interval_updates=-1, keep_last_epochs=-1, leave_unmasked_prob=0.1, load_checkpoint_on_all_dp_ranks=False, localsgd_frequency=3, log_format=’simple’, log_interval=1, lr=[0.0005], lr_scheduler=’polynomial_decay’, mask_multiple_length=1, mask_prob=0.15, mask_stdev=0.0, mask_whole_words=False, max_epoch=0, max_tokens=None, max_tokens_valid=None, max_update=125000, maximize_best_checkpoint_metric=False, memory_efficient_bf16=False, 
memory_efficient_fp16=False, min_loss_scale=0.0001, model_parallel_size=1, no_epoch_checkpoints=False, no_last_checkpoints=False, no_progress_bar=False, no_save=False, no_save_optimizer_state=False, no_seed_provided=False, nprocs_per_node=8, num_shards=1, num_workers=1, optimizer=’adam’, optimizer_overrides=’{}’, pad=1, patience=-1, pipeline_balance=None, pipeline_checkpoint=’never’, pipeline_chunks=0, pipeline_decoder_balance=None, pipeline_decoder_devices=None, pipeline_devices=None, pipeline_encoder_balance=None, pipeline_encoder_devices=None, pipeline_model_parallel=False, pooler_activation_fn=’tanh’, pooler_dropout=0.0, power=1.0, profile=False, quant_noise_pq=0, quant_noise_pq_block_size=8, quant_noise_scalar=0, quantization_config_path=None, random_token_prob=0.1, required_batch_size_multiple=8, required_seq_len_multiple=1, reset_dataloader=False, reset_logging=True, reset_lr_scheduler=False, reset_meters=False, reset_optimizer=False, restore_file=’checkpoint_last.pt’, sample_break_mode=’complete’, save_dir=’checkpoints’, save_interval=1, save_interval_updates=0, scoring=’bleu’, seed=1, sentence_avg=False, shard_id=0, shorten_data_split_list=’’, shorten_method=’none’, skip_invalid_size_inputs_valid_test=False, slowmo_algorithm=’LocalSGD’, slowmo_momentum=None, spectral_norm_classification_head=False, stop_min_lr=-1.0, stop_time_hours=0, task=’masked_lm’, tensorboard_logdir=None, threshold_loss_scale=None, tokenizer=None, tokens_per_sample=512, total_num_update=’125000’, tpu=False, train_subset=’train’, unk=3, untie_weights_roberta=False, update_freq=[16], use_bmuf=False, use_old_adam=False, user_dir=None, valid_subset=’valid’, validate_after_updates=0, validate_interval=1, validate_interval_updates=0, wandb_project=None, warmup_updates=10000, weight_decay=0.01, zero_sharding=’none’), ‘criterion’: Namespace(_name=’masked_lm’, activation_dropout=0.0, activation_fn=’gelu’, adam_betas=’(0.9,0.98)’, adam_eps=1e-06, all_gather_list_size=16384, arch=’roberta_base’, 
attention_dropout=0.1, azureml_logging=False, batch_size=16, batch_size_valid=16, best_checkpoint_metric=’loss’, bf16=False, bpe=None, broadcast_buffers=False, bucket_cap_mb=25, checkpoint_shard_count=1, checkpoint_suffix=’’, clip_norm=0.0, cpu=False, criterion=’masked_lm’, curriculum=0, data=’data-bin/wikitext-103’, data_buffer_size=10, dataset_impl=None, ddp_backend=’c10d’, device_id=0, disable_validation=False, distributed_backend=’nccl’, distributed_init_method=None, distributed_no_spawn=False, distributed_port=-1, distributed_rank=0, distributed_world_size=8, distributed_wrapper=’DDP’, dropout=0.1, empty_cache_freq=0, encoder_attention_heads=12, encoder_embed_dim=768, encoder_ffn_embed_dim=3072, encoder_layerdrop=0, encoder_layers=12, encoder_layers_to_keep=None, end_learning_rate=0.0, eos=2, fast_stat_sync=False, find_unused_parameters=False, finetune_from_model=None, fix_batches_to_gpus=False, fixed_validation_seed=None, force_anneal=None, fp16=True, fp16_init_scale=128, fp16_no_flatten_grads=False, fp16_scale_tolerance=0.0, fp16_scale_window=None, freq_weighted_replacement=False, gen_subset=’test’, heartbeat_timeout=-1, keep_best_checkpoints=-1, keep_interval_updates=-1, keep_last_epochs=-1, leave_unmasked_prob=0.1, load_checkpoint_on_all_dp_ranks=False, localsgd_frequency=3, log_format=’simple’, log_interval=1, lr=[0.0005], lr_scheduler=’polynomial_decay’, mask_multiple_length=1, mask_prob=0.15, mask_stdev=0.0, mask_whole_words=False, max_epoch=0, max_tokens=None, max_tokens_valid=None, max_update=125000, maximize_best_checkpoint_metric=False, memory_efficient_bf16=False, memory_efficient_fp16=False, min_loss_scale=0.0001, model_parallel_size=1, no_epoch_checkpoints=False, no_last_checkpoints=False, no_progress_bar=False, no_save=False, no_save_optimizer_state=False, no_seed_provided=False, nprocs_per_node=8, num_shards=1, num_workers=1, optimizer=’adam’, optimizer_overrides=’{}’, pad=1, patience=-1, pipeline_balance=None, pipeline_checkpoint=’never’, 
pipeline_chunks=0, pipeline_decoder_balance=None, pipeline_decoder_devices=None, pipeline_devices=None, pipeline_encoder_balance=None, pipeline_encoder_devices=None, pipeline_model_parallel=False, pooler_activation_fn=’tanh’, pooler_dropout=0.0, power=1.0, profile=False, quant_noise_pq=0, quant_noise_pq_block_size=8, quant_noise_scalar=0, quantization_config_path=None, random_token_prob=0.1, required_batch_size_multiple=8, required_seq_len_multiple=1, reset_dataloader=False, reset_logging=True, reset_lr_scheduler=False, reset_meters=False, reset_optimizer=False, restore_file=’checkpoint_last.pt’, sample_break_mode=’complete’, save_dir=’checkpoints’, save_interval=1, save_interval_updates=0, scoring=’bleu’, seed=1, sentence_avg=False, shard_id=0, shorten_data_split_list=’’, shorten_method=’none’, skip_invalid_size_inputs_valid_test=False, slowmo_algorithm=’LocalSGD’, slowmo_momentum=None, spectral_norm_classification_head=False, stop_min_lr=-1.0, stop_time_hours=0, task=’masked_lm’, tensorboard_logdir=None, threshold_loss_scale=None, tokenizer=None, tokens_per_sample=512, total_num_update=’125000’, tpu=False, train_subset=’train’, unk=3, untie_weights_roberta=False, update_freq=[16], use_bmuf=False, use_old_adam=False, user_dir=None, valid_subset=’valid’, validate_after_updates=0, validate_interval=1, validate_interval_updates=0, wandb_project=None, warmup_updates=10000, weight_decay=0.01, zero_sharding=’none’), ‘optimizer’: {‘_name’: ‘adam’, ‘adam_betas’: ‘(0.9,0.98)’, ‘adam_eps’: 1e-06, ‘weight_decay’: 0.01, ‘use_old_adam’: False, ‘tpu’: False, ‘lr’: [0.0005]}, ‘lr_scheduler’: {‘_name’: ‘polynomial_decay’, ‘warmup_updates’: 10000, ‘force_anneal’: None, ‘end_learning_rate’: 0.0, ‘power’: 1.0, ‘total_num_update’: 125000.0, ‘lr’: [0.0005]}, ‘scoring’: {‘_name’: ‘bleu’, ‘pad’: 1, ‘eos’: 2, ‘unk’: 3}, ‘bpe’: None, ‘tokenizer’: None}

```text
2020-12-28 15:40:45 | INFO | fairseq.tasks.masked_lm | dictionary: 50264 types
2020-12-28 15:40:45 | INFO | fairseq.data.data_utils | loaded 3760 examples from: data-bin/wikitext-103/valid
2020-12-28 15:40:45 | INFO | fairseq.tasks.masked_lm | loaded 580 blocks from: data-bin/wikitext-103/valid
2020-12-28 15:40:51 | INFO | fairseq_cli.train | RobertaModel(
  (encoder): RobertaEncoder(
    (sentence_encoder): TransformerSentenceEncoder(
      (dropout_module): FairseqDropout()
      (embed_tokens): Embedding(50265, 768, padding_idx=1)
      (embed_positions): LearnedPositionalEmbedding(514, 768, padding_idx=1)
      (layers): ModuleList(
        (0): TransformerSentenceEncoderLayer(
          (dropout_module): FairseqDropout()
          (activation_dropout_module): FairseqDropout()
          (self_attn): MultiheadAttention(
            (dropout_module): FairseqDropout()
            (k_proj): Linear(in_features=768, out_features=768, bias=True)
            (v_proj): Linear(in_features=768, out_features=768, bias=True)
            (q_proj): Linear(in_features=768, out_features=768, bias=True)
            (out_proj): Linear(in_features=768, out_features=768, bias=True)
          )
          (self_attn_layer_norm): LayerNorm((768,), eps=1e-05, elementwise_affine=True)
          (fc1): Linear(in_features=768, out_features=3072, bias=True)
          (fc2): Linear(in_features=3072, out_features=768, bias=True)
          (final_layer_norm): LayerNorm((768,), eps=1e-05, elementwise_affine=True)
        )
        (1): TransformerSentenceEncoderLayer(
          (dropout_module): FairseqDropout()
          (activation_dropout_module): FairseqDropout()
          (self_attn): MultiheadAttention(
            (dropout_module): FairseqDropout()
            (k_proj): Linear(in_features=768, out_features=768, bias=True)
            (v_proj): Linear(in_features=768, out_features=768, bias=True)
            (q_proj): Linear(in_features=768, out_features=768, bias=True)
            (out_proj): Linear(in_features=768, out_features=768, bias=True)
          )
          (self_attn_layer_norm): LayerNorm((768,), eps=1e-05, elementwise_affine=True)
          (fc1): Linear(in_features=768, out_features=3072, bias=True)
          (fc2): Linear(in_features=3072, out_features=768, bias=True)
          (final_layer_norm): LayerNorm((768,), eps=1e-05, elementwise_affine=True)
        )
        (2): TransformerSentenceEncoderLayer(
          (dropout_module): FairseqDropout()
          (activation_dropout_module): FairseqDropout()
          (self_attn): MultiheadAttention(
            (dropout_module): FairseqDropout()
            (k_proj): Linear(in_features=768, out_features=768, bias=True)
            (v_proj): Linear(in_features=768, out_features=768, bias=True)
            (q_proj): Linear(in_features=768, out_features=768, bias=True)
            (out_proj): Linear(in_features=768, out_features=768, bias=True)
          )
          (self_attn_layer_norm): LayerNorm((768,), eps=1e-05, elementwise_affine=True)
          (fc1): Linear(in_features=768, out_features=3072, bias=True)
          (fc2): Linear(in_features=3072, out_features=768, bias=True)
          (final_layer_norm): LayerNorm((768,), eps=1e-05, elementwise_affine=True)
        )
        (3): TransformerSentenceEncoderLayer(
          (dropout_module): FairseqDropout()
          (activation_dropout_module): FairseqDropout()
          (self_attn): MultiheadAttention(
            (dropout_module): FairseqDropout()
            (k_proj): Linear(in_features=768, out_features=768, bias=True)
            (v_proj): Linear(in_features=768, out_features=768, bias=True)
            (q_proj): Linear(in_features=768, out_features=768, bias=True)
            (out_proj): Linear(in_features=768, out_features=768, bias=True)
          )
          (self_attn_layer_norm): LayerNorm((768,), eps=1e-05, elementwise_affine=True)
          (fc1): Linear(in_features=768, out_features=3072, bias=True)
          (fc2): Linear(in_features=3072, out_features=768, bias=True)
          (final_layer_norm): LayerNorm((768,), eps=1e-05, elementwise_affine=True)
        )
        (4): TransformerSentenceEncoderLayer(
          (dropout_module): FairseqDropout()
          (activation_dropout_module): FairseqDropout()
          (self_attn): MultiheadAttention(
            (dropout_module): FairseqDropout()
            (k_proj): Linear(in_features=768, out_features=768, bias=True)
            (v_proj): Linear(in_features=768, out_features=768, bias=True)
            (q_proj): Linear(in_features=768, out_features=768, bias=True)
            (out_proj): Linear(in_features=768, out_features=768, bias=True)
          )
          (self_attn_layer_norm): LayerNorm((768,), eps=1e-05, elementwise_affine=True)
          (fc1): Linear(in_features=768, out_features=3072, bias=True)
          (fc2): Linear(in_features=3072, out_features=768, bias=True)
          (final_layer_norm): LayerNorm((768,), eps=1e-05, elementwise_affine=True)
        )
        (5): TransformerSentenceEncoderLayer(
          (dropout_module): FairseqDropout()
          (activation_dropout_module): FairseqDropout()
          (self_attn): MultiheadAttention(
            (dropout_module): FairseqDropout()
            (k_proj): Linear(in_features=768, out_features=768, bias=True)
            (v_proj): Linear(in_features=768, out_features=768, bias=True)
            (q_proj): Linear(in_features=768, out_features=768, bias=True)
            (out_proj): Linear(in_features=768, out_features=768, bias=True)
          )
          (self_attn_layer_norm): LayerNorm((768,), eps=1e-05, elementwise_affine=True)
          (fc1): Linear(in_features=768, out_features=3072, bias=True)
          (fc2): Linear(in_features=3072, out_features=768, bias=True)
          (final_layer_norm): LayerNorm((768,), eps=1e-05, elementwise_affine=True)
        )
        (6): TransformerSentenceEncoderLayer(
          (dropout_module): FairseqDropout()
          (activation_dropout_module): FairseqDropout()
          (self_attn): MultiheadAttention(
            (dropout_module): FairseqDropout()
            (k_proj): Linear(in_features=768, out_features=768, bias=True)
            (v_proj): Linear(in_features=768, out_features=768, bias=True)
            (q_proj): Linear(in_features=768, out_features=768, bias=True)
            (out_proj): Linear(in_features=768, out_features=768, bias=True)
          )
          (self_attn_layer_norm): LayerNorm((768,), eps=1e-05, elementwise_affine=True)
          (fc1): Linear(in_features=768, out_features=3072, bias=True)
          (fc2): Linear(in_features=3072, out_features=768, bias=True)
          (final_layer_norm): LayerNorm((768,), eps=1e-05, elementwise_affine=True)
        )
        (7): TransformerSentenceEncoderLayer(
          (dropout_module): FairseqDropout()
          (activation_dropout_module): FairseqDropout()
          (self_attn): MultiheadAttention(
            (dropout_module): FairseqDropout()
            (k_proj): Linear(in_features=768, out_features=768, bias=True)
            (v_proj): Linear(in_features=768, out_features=768, bias=True)
            (q_proj): Linear(in_features=768, out_features=768, bias=True)
            (out_proj): Linear(in_features=768, out_features=768, bias=True)
          )
          (self_attn_layer_norm): LayerNorm((768,), eps=1e-05, elementwise_affine=True)
          (fc1): Linear(in_features=768, out_features=3072, bias=True)
          (fc2): Linear(in_features=3072, out_features=768, bias=True)
          (final_layer_norm): LayerNorm((768,), eps=1e-05, elementwise_affine=True)
        )
        (8): TransformerSentenceEncoderLayer(
          (dropout_module): FairseqDropout()
          (activation_dropout_module): FairseqDropout()
          (self_attn): MultiheadAttention(
            (dropout_module): FairseqDropout()
            (k_proj): Linear(in_features=768, out_features=768, bias=True)
            (v_proj): Linear(in_features=768, out_features=768, bias=True)
            (q_proj): Linear(in_features=768, out_features=768, bias=True)
            (out_proj): Linear(in_features=768, out_features=768, bias=True)
          )
          (self_attn_layer_norm): LayerNorm((768,), eps=1e-05, elementwise_affine=True)
          (fc1): Linear(in_features=768, out_features=3072, bias=True)
          (fc2): Linear(in_features=3072, out_features=768, bias=True)
          (final_layer_norm): LayerNorm((768,), eps=1e-05, elementwise_affine=True)
        )
        (9): TransformerSentenceEncoderLayer(
          (dropout_module): FairseqDropout()
          (activation_dropout_module): FairseqDropout()
          (self_attn): MultiheadAttention(
            (dropout_module): FairseqDropout()
            (k_proj): Linear(in_features=768, out_features=768, bias=True)
            (v_proj): Linear(in_features=768, out_features=768, bias=True)
            (q_proj): Linear(in_features=768, out_features=768, bias=True)
            (out_proj): Linear(in_features=768, out_features=768, bias=True)
          )
          (self_attn_layer_norm): LayerNorm((768,), eps=1e-05, elementwise_affine=True)
          (fc1): Linear(in_features=768, out_features=3072, bias=True)
          (fc2): Linear(in_features=3072, out_features=768, bias=True)
          (final_layer_norm): LayerNorm((768,), eps=1e-05, elementwise_affine=True)
        )
        (10): TransformerSentenceEncoderLayer(
          (dropout_module): FairseqDropout()
          (activation_dropout_module): FairseqDropout()
          (self_attn): MultiheadAttention(
            (dropout_module): FairseqDropout()
            (k_proj): Linear(in_features=768, out_features=768, bias=True)
            (v_proj): Linear(in_features=768, out_features=768, bias=True)
            (q_proj): Linear(in_features=768, out_features=768, bias=True)
            (out_proj): Linear(in_features=768, out_features=768, bias=True)
          )
          (self_attn_layer_norm): LayerNorm((768,), eps=1e-05, elementwise_affine=True)
          (fc1): Linear(in_features=768, out_features=3072, bias=True)
          (fc2): Linear(in_features=3072, out_features=768, bias=True)
          (final_layer_norm): LayerNorm((768,), eps=1e-05, elementwise_affine=True)
        )
        (11): TransformerSentenceEncoderLayer(
          (dropout_module): FairseqDropout()
          (activation_dropout_module): FairseqDropout()
          (self_attn): MultiheadAttention(
            (dropout_module): FairseqDropout()
            (k_proj): Linear(in_features=768, out_features=768, bias=True)
            (v_proj): Linear(in_features=768, out_features=768, bias=True)
            (q_proj): Linear(in_features=768, out_features=768, bias=True)
            (out_proj): Linear(in_features=768, out_features=768, bias=True)
          )
          (self_attn_layer_norm): LayerNorm((768,), eps=1e-05, elementwise_affine=True)
          (fc1): Linear(in_features=768, out_features=3072, bias=True)
          (fc2): Linear(in_features=3072, out_features=768, bias=True)
          (final_layer_norm): LayerNorm((768,), eps=1e-05, elementwise_affine=True)
        )
      )
      (emb_layer_norm): LayerNorm((768,), eps=1e-05, elementwise_affine=True)
    )
    (lm_head): RobertaLMHead(
      (dense): Linear(in_features=768, out_features=768, bias=True)
      (layer_norm): LayerNorm((768,), eps=1e-05, elementwise_affine=True)
    )
  )
  (classification_heads): ModuleDict()
)
2020-12-28 15:40:51 | INFO | fairseq_cli.train | task: MaskedLMTask
2020-12-28 15:40:51 | INFO | fairseq_cli.train | model: RobertaModel
2020-12-28 15:40:51 | INFO | fairseq_cli.train | criterion: MaskedLmLoss
2020-12-28 15:40:51 | INFO | fairseq_cli.train | num. model params: 124696665 (num. trained: 124696665)
2020-12-28 15:40:56 | INFO | fairseq.trainer | detected shared parameter: encoder.sentence_encoder.embed_tokens.weight <- encoder.lm_head.weight
2020-12-28 15:40:57 | INFO | fairseq.utils | CUDA enviroments for all 8 workers
2020-12-28 15:40:57 | INFO | fairseq.utils | rank 0: capabilities = 7.5 ; total memory = 23.653 GB ; name = TITAN RTX
2020-12-28 15:40:57 | INFO | fairseq.utils | rank 1: capabilities = 7.5 ; total memory = 23.653 GB ; name = TITAN RTX
2020-12-28 15:40:57 | INFO | fairseq.utils | rank 2: capabilities = 7.5 ; total memory = 23.653 GB ; name = TITAN RTX
2020-12-28 15:40:57 | INFO | fairseq.utils | rank 3: capabilities = 7.5 ; total memory = 23.653 GB ; name = TITAN RTX
2020-12-28 15:40:57 | INFO | fairseq.utils | rank 4: capabilities = 7.5 ; total memory = 23.653 GB ; name = TITAN RTX
2020-12-28 15:40:57 | INFO | fairseq.utils | rank 5: capabilities = 7.5 ; total memory = 23.653 GB ; name = TITAN RTX
2020-12-28 15:40:57 | INFO | fairseq.utils | rank 6: capabilities = 7.5 ; total memory = 23.653 GB ; name = TITAN RTX
2020-12-28 15:40:57 | INFO | fairseq.utils | rank 7: capabilities = 7.5 ; total memory = 23.653 GB ; name = TITAN RTX
2020-12-28 15:40:57 | INFO | fairseq.utils | CUDA enviroments for all 8 workers
2020-12-28 15:40:57 | INFO | fairseq_cli.train | training on 8 devices (GPUs/TPUs)
2020-12-28 15:40:57 | INFO | fairseq_cli.train | max tokens per GPU = None and batch size per GPU = 16
2020-12-28 15:40:57 | INFO | fairseq.trainer | Preparing to load checkpoint checkpoints/checkpoint_last.pt
2020-12-28 15:40:57 | INFO | fairseq.trainer | No existing checkpoint found checkpoints/checkpoint_last.pt
2020-12-28 15:40:57 | INFO | fairseq.trainer | loading train data for epoch 1
2020-12-28 15:40:57 | INFO | fairseq.data.data_utils | loaded 1801350 examples from: data-bin/wikitext-103/train
2020-12-28 15:40:57 | INFO | fairseq.tasks.masked_lm | loaded 280678 blocks from: data-bin/wikitext-103/train
```
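The config dump in the log shows lr_scheduler='polynomial_decay' with lr=0.0005, warmup_updates=10000, total_num_update=125000, end_learning_rate=0.0 and power=1.0. The sketch below reproduces that schedule as I understand fairseq's polynomial_decay scheduler (linear warmup, then polynomial decay to the end value); treat it as an approximation for inspecting the curve, not fairseq's own code:

```python
def polynomial_decay_lr(step: int, peak_lr: float = 5e-4, warmup: int = 10_000,
                        total: int = 125_000, end_lr: float = 0.0, power: float = 1.0) -> float:
    """Linear warmup to peak_lr, then polynomial decay to end_lr at `total` updates."""
    if warmup > 0 and step <= warmup:
        return peak_lr * step / warmup
    if step >= total:
        return end_lr
    remaining = 1 - (step - warmup) / (total - warmup)
    return (peak_lr - end_lr) * remaining ** power + end_lr


for s in (0, 5_000, 10_000, 67_500, 125_000):
    print(s, polynomial_decay_lr(s))
```

With power=1.0 the decay is linear, so the learning rate is back at half its peak halfway between step 10,000 and step 125,000.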

A walkthrough of the fairseq model code (in Chinese): https://zhuanlan.zhihu.com/p/141210591

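The enum path problem: the third-party enum34 package gets imported instead of Python's standard-library enum module.

Uninstalling enum34 fixes it:

```shell
pip uninstall enum34
```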
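To confirm which enum module Python actually loads, check its file path and whether it has `IntFlag`, which the standard library added in Python 3.6 and which the enum34 backport lacks. A small diagnostic sketch; the function name is hypothetical:

```python
import enum


def enum_is_shadowed() -> bool:
    """A missing IntFlag usually means the enum34 backport is shadowing the stdlib module."""
    return not hasattr(enum, "IntFlag")


print("enum loaded from:", enum.__file__)
print("shadowed by enum34?", enum_is_shadowed())
```

If the path points into site-packages instead of the Python installation's lib directory, enum34 is the culprit.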

List installed system (apt) packages and Python (pip) packages:

```shell
apt list --installed
```

```shell
pip list
```

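To check the PyTorch version, start a Python interactive session:

```python
import torch
```

Check the conda version:

```shell
conda --version
```

Check the CUDA version. `nvcc -V` reports the installed CUDA toolkit, while `torch.version.cuda` (in Python) reports the CUDA version PyTorch was built with; the two can differ:

```shell
nvcc -V
```

```python
torch.version.cuda
```

Check the numpy version:

```shell
pip show numpy
```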
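To check several package versions at once from Python without importing the packages themselves, the standard library's `importlib.metadata` (Python 3.8+) reads the installed metadata, similar to `pip show`. A sketch; the helper name is hypothetical, and it does not report CUDA versions:

```python
from __future__ import annotations

from importlib.metadata import PackageNotFoundError, version


def installed_version(dist_name: str) -> str | None:
    """Return the installed version of a distribution, or None if it is missing."""
    try:
        return version(dist_name)
    except PackageNotFoundError:
        return None


for pkg in ("torch", "numpy"):
    print(pkg, installed_version(pkg) or "not installed")
```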

Download Docker Desktop from https://hub.docker.com/editions/community/docker-ce-desktop-mac/

Double-click the Docker.dmg file.

Drag the Docker icon to the Applications folder.

Double-click Docker.app to start Docker.

Check from the command line that Docker was installed successfully:

```shell
docker version
docker-compose --version
docker-machine --version
```

How to fix the docker-machine "command not found" error?

```shell
base=https://github.com/docker/machine/releases/download/v0.16.0 &&
```

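Add a registry mirror (image accelerator)

Register an Alibaba Cloud (Aliyun) account to get your registry mirror address.

Click the Docker icon, go to Preferences -> Docker Engine, and edit the JSON in the right-hand pane:

```json
{
```

Click Apply & Restart.

Run `docker info` to check whether the mirror was added (it is listed under Registry Mirrors).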
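The Docker Engine pane edits the daemon configuration (daemon.json), where mirrors go in the `"registry-mirrors"` list. A sketch of adding a mirror to that JSON without clobbering other keys; the mirror URL is a placeholder for the address shown in your own Aliyun console, and the helper name is hypothetical:

```python
import json


def add_registry_mirror(daemon_json: str, mirror_url: str) -> str:
    """Return daemon.json text with mirror_url added to "registry-mirrors" (no duplicates)."""
    config = json.loads(daemon_json) if daemon_json.strip() else {}
    mirrors = config.setdefault("registry-mirrors", [])
    if mirror_url not in mirrors:
        mirrors.append(mirror_url)
    return json.dumps(config, indent=2)


# Placeholder address: replace <your-id> with the accelerator ID from your Aliyun console.
print(add_registry_mirror('{"experimental": false}', "https://<your-id>.mirror.aliyuncs.com"))
```

Paste the printed JSON into the Docker Engine pane, then Apply & Restart.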

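Official docs: https://hexo.io/zh-cn/docs/

Install git.

Install Node.js: `brew install node`

Install Hexo: `npm install -g hexo-cli`

Create a directory for the blog: `mkdir MyBlog`

Inside that directory, run `hexo init`

Run `hexo s`; it prints "Hexo is running at http://localhost:4000 . Press Ctrl+C to stop." Open that address in a browser.

Link the blog to a GitHub repository by configuring _config.yml:

```yaml
# Deployment
```

Generate the static pages: `hexo g`

Deploy to GitHub Pages: `hexo d`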

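Error 1:

```text
INFO Validating config
ERROR Deployer not found: git
```

Fix: `npm install --save hexo-deployer-git`

Error 2:

```text
*** Please tell me who you are.
```

Fix: set your git identity with `git config --global user.email "you@example.com"` and `git config --global user.name "Your Name"`.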

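Adding and uploading images:

Step 1: install the plugin

```shell
npm install https://github.com/7ym0n/hexo-asset-image --save
```

Step 2: open the Hexo config file _config.yml and set

```yaml
post_asset_folder: true
```

Step 3: `hexo n 'add_photo'` creates add_photo.md and an add_photo folder under source/_posts. Put the image in that folder and reference it from the .md file with Markdown image syntax; the image then shows up in the blog.

Note: in the editor's menu bar, choose Format -> Image -> When Inserting Local Images -> Upload Image.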

In the project directory, run

```shell
python3 -m venv env
source env/bin/activate
```

To leave the virtual environment, run `deactivate`.
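The same environment can be created from Python with the standard-library `venv` module, and a running interpreter can tell whether it is inside a venv because `sys.prefix` then differs from `sys.base_prefix`. A minimal sketch; the helper names are hypothetical:

```python
import sys
import venv
from pathlib import Path


def in_virtualenv() -> bool:
    """True when running inside a venv: sys.prefix then differs from sys.base_prefix."""
    return sys.prefix != sys.base_prefix


def create_env(path: str, with_pip: bool = True) -> Path:
    """Programmatic equivalent of `python3 -m venv <path>`."""
    venv.create(path, with_pip=with_pip)
    return Path(path)


print("inside a venv:", in_virtualenv())
```

After `source env/bin/activate`, running this with `python` should report `True`; after `deactivate`, `False`.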

Installing from source

```shell
git clone https://github.com/huggingface/transformers.git
cd transformers
pip install -e .
```

Test:

```shell
python -c "from transformers import pipeline; print(pipeline('sentiment-analysis')('I hate you'))"
```

It should print something like [{'label': 'NEGATIVE', 'score': 0.9991129040718079}]

报错

pip3 install torch torchvision针对网络问题,手动下载,本地加载预训练权重

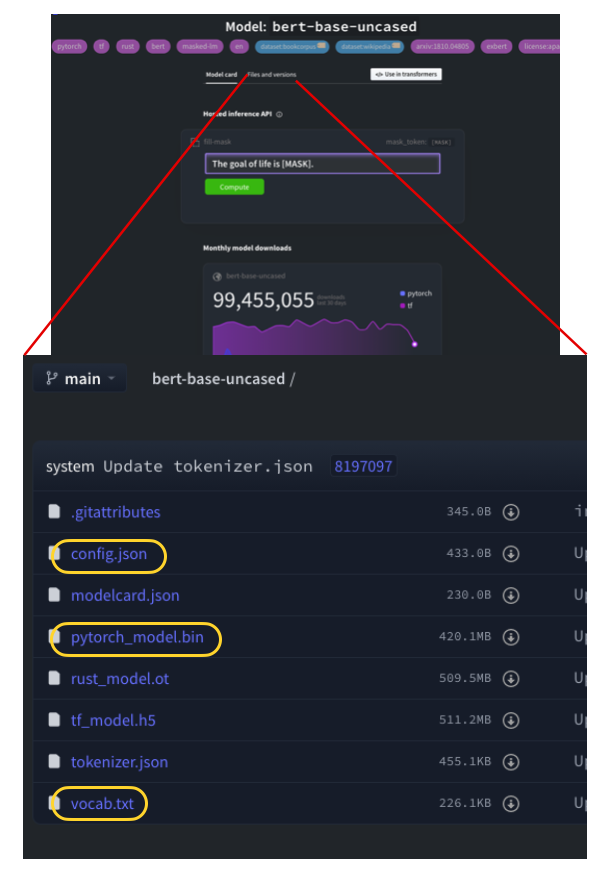

网站https://huggingface.co/models包含了transformers支持的所有模型,选中需要下载的模型。以bert-base-uncased为例。点开files and versions,可以看到文件列表,找到列表中的config.json,pytorch_model.bin和vocab文件,点击下载图标,下载到指定文件夹bert-base-uncased中。config.json是模型的配置文件,pytorch_model.bin是存储的预训练模型,vocab是字典。
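Before loading, it helps to check that the manual download is complete. A small sketch, assuming the bert-base-uncased vocab file is named vocab.txt (as it is on the model page); the helper name is hypothetical:

```python
from __future__ import annotations

from pathlib import Path

# Files the steps above download for bert-base-uncased.
REQUIRED_FILES = ("config.json", "pytorch_model.bin", "vocab.txt")


def missing_model_files(model_dir: str) -> list[str]:
    """Return the required files that are not present in model_dir."""
    root = Path(model_dir)
    return [name for name in REQUIRED_FILES if not (root / name).is_file()]


print(missing_model_files("bert-base-uncased"))
```

Once the list is empty, the folder path can be passed to `from_pretrained`, e.g. `BertTokenizer.from_pretrained("./bert-base-uncased")` and `BertModel.from_pretrained("./bert-base-uncased")`, so nothing is fetched from the network.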

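How to run Jupyter Notebook inside a virtual environment

Reference: https://www.jianshu.com/p/0432155d1bef

Install the ipykernel package in the virtual environment:

```shell
pip3 install ipykernel
```

With the environment activated, register it as a Jupyter kernel:

```shell
python -m ipykernel install --name env
```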